The monster, the second sister and Cinderella: three Magi announcing the era of direct gravitational wave astrometry

On February 11 the LIGO-Virgo collaboration announced the detection of Gravitational Waves (GW). They were emitted about one billion years ago by a Binary Black Hole (BBH) merger and reached Earth on September 14, 2015. The claim, as it appears in the ‘discovery paper’ [1] and stressed in press releases and seminars, was based on “> 5.1 σ significance.” Ironically, shortly after, on March 7 the American Statistical Association (ASA) came out (independently) with a strong statement warning scientists about interpretation and misuse of p-values [2]...

In June we finally learned [4] that another ‘one and a half’ gravitational waves from Binary Black Hole mergers were also observed in 2015, where by the ‘half’ I refer to the October 12 event, strongly believed by the collaboration to be a gravitational wave, although having only 1.7 σ significance and therefore classified just as LVT (LIGO-Virgo Trigger) instead of GW. However, another figure of merit has been provided by the collaboration for each event, a number based on probability theory that tells how much we must modify the relative beliefs in two alternative hypotheses in the light of the experimental information. This number, to my knowledge never even mentioned in press releases or seminars to large audiences, is the Bayes factor (BF), whose meaning is easily explained: if you considered a priori two alternative hypotheses equally likely, a BF of 100 changes your odds to 100 to 1; if instead you considered one hypothesis rather unlikely, let us say your odds were 1 to 100, a BF of 10⁴ turns them the other way around, that is 100 to 1. You will be amazed to learn that even the “1.7 sigma” LVT151012 has a BF of the order of 10¹⁰, considered very strong evidence in favor of the hypothesis “Binary Black Hole merger” against the alternative hypothesis “Noise”. (Alan Turing would have called the evidence provided by such a huge ‘Bayes factor,’ or what I. J. Good would have preferred to call “Bayes-Turing factor” [5], 100 deciban, well above the 17 deciban threshold considered by the team at Bletchley Park during World War II to be reasonably confident of having cracked the daily Enigma key [7].)...
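The arithmetic behind these statements can be checked with a minimal sketch. The function names `posterior_odds` and `decibans` are illustrative choices, not taken from [4] or [5]; the only rules assumed are that posterior odds equal prior odds times the Bayes factor, and that a deciban is ten times the base-10 logarithm of the Bayes factor.

```python
import math


def posterior_odds(prior_odds, bayes_factor):
    """Update the odds of hypothesis A versus B with a Bayes factor (hypothetical helper)."""
    return prior_odds * bayes_factor


def decibans(bayes_factor):
    """Weight of evidence in decibans: 10 * log10(BF) (hypothetical helper)."""
    return 10 * math.log10(bayes_factor)


# Equal prior odds (1 to 1) and a Bayes factor of 100.
print(posterior_odds(1, 100))
# Prior odds of 1 to 100 and a Bayes factor of 10^4.
print(posterior_odds(1 / 100, 1e4))
# Weight of evidence carried by a Bayes factor of order 10^10.
print(decibans(1e10))
```

Both updates end at odds of about 100 to 1, and a Bayes factor of 10¹⁰ corresponds to 100 deciban, far above the 17 deciban threshold quoted for Bletchley Park.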

|

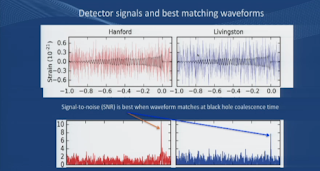

| Figure 3: The Monster (GW150914), Cinderella (LVT151012) and the third sister (GW151226), visiting us in 2015 (Fig. 1 of [4] – see text for the reason of the names). The published ‘significance’ of the three events (Table 1 of [4]) is, in the order, “> 5.3 σ”, “1.7 σ” and “> 5.3 σ”, corresponding to the following p-values: 7.5 × 10-8 , 0.045, 7.5 × 10-8. The log of the Bayes factors are instead (Table 4 of [4]) approximately 289, 23 and 60, corresponding to Bayes factors about 3 × 10125 , 1010 and 1026. |
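The conversions quoted in the caption can be reproduced with a short sketch. Two assumptions are made here that the caption does not state explicitly: the logarithms of the Bayes factors are natural logarithms, and a significance of n sigma is translated into a p-value as the one-sided tail of a standard Gaussian. The helper names are hypothetical.

```python
import math


def log10_from_ln(ln_bayes_factor):
    """Convert a natural-log Bayes factor into its base-10 exponent."""
    return ln_bayes_factor / math.log(10)


def one_sided_p_value(n_sigma):
    """One-sided Gaussian tail probability beyond n_sigma (assumed convention)."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2))


# Natural-log Bayes factors as quoted in the caption (Table 4 of [4]).
for name, ln_bf in [("GW150914", 289), ("LVT151012", 23), ("GW151226", 60)]:
    print(name, "BF is about 10 to the power", round(log10_from_ln(ln_bf), 1))

# Tail probabilities for the two quoted significance levels.
for n_sigma in (1.7, 5.3):
    print(n_sigma, "sigma gives p of about", one_sided_p_value(n_sigma))
```

Under these assumptions the exponents come out close to 125.5, 10 and 26, matching the Bayes factors in the caption, while 1.7 σ gives a p-value of about 0.045 and 5.3 σ a p-value of the same order as the quoted 7.5 × 10⁻⁸.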

... even if at first sight it does not look dissimilar from GW151226 (but remember that the waves in Fig. 3 do not show raw data!), the October 12 event, hereafter referred to as Cinderella, is not ranked as GW, but, more modestly, as LVT, for LIGO-Virgo Trigger. The reason for the downgrading is that ‘she’ cannot wear a “> 5σ’s dress” to go together with the ‘sisters’ to the ‘sumptuous ball of the Establishment.’ In fact Chance has assigned ‘her’ only a poor, unpresentable 1.7 σ ranking, usually considered in the Particle Physics community not even worth a mention in a parallel session of a minor conference by an undergraduate student. But, despite the modest ‘statistical significance’, experts are highly confident, for physics reasons (and because of their understanding of the background), that this is also a gravitational wave radiated by a BBH merger, much more than the 87% quoted in [4]. [Detecting something that has good reason to exist, because of our understanding of the Physical World (related to a network of other experimental facts and theories connecting them!), is quite different from just observing an unexpected bump, possibly due to background, even if with small probability, as already commented in footnote 15. And remember that whatever we observe in real life, if seen with high enough resolution in the N-dimensional phase space, had a very small probability of occurring! (Imagine, as a simplified example, the pixel content of any picture you take walking on the road, in which N is equal to five, i.e., two plus the RGB code of each pixel.)]

Giulio D'Agostini (Submitted on 6 Sep 2016)

Will the first 5-sigma claim from LHC Run2 be a fluke?

In the meanwhile it seems that particle physicists are slow to learn the lesson and the number of graves in the Cemetery of physics ... has increased ..., the last funeral being recently celebrated in Chicago on August 5, with the following obituary for the dear departed: “The intriguing hint of a possible resonance at 750 GeV decaying into photon pairs, which caused considerable interest from the 2015 data, has not reappeared in the much larger 2016 data set and thus appears to be a statistical fluctuation” [57]. And de Rujula’s dictum gets corroborated. [If you disbelieve every result presented as having a 3 sigma, or ‘equivalently’ a 99.7% chance of being correct, you will turn out to be right 99.7% of the time. (‘Equivalently’ within quote marks is de Rujula’s original, because he knows very well that there is no equivalence at all.)] Someone would argue that this incident has happened because the sigmas were only about three and not five. But it is not a question of sigmas, but of Physics, as can be understood from those who in 2012 incorrectly turned the 5σ into a 99.99994% “discovery probability” for the Higgs [58], while in 2016 they are sceptical in front of a 6σ claim (“if I have to bet, my money is on the fact that the result will not survive the verifications” [59]): the famous “du sublime au ridicule, il n’y a qu’un pas” seems really appropriate! ...

Seriously, the question is indeed that, now that predictions of New Physics around what should have been a natural scale have substantially all failed, the only ‘sure’ scale I can see seems to be Planck’s scale. I really hope that LHC will surprise us, but hoping and believing are different things. And, since I have the impression that there are too many nervous people around, both among experimentalists and theorists, and because the number of possible histograms to look at is quite large, after the easy bets of the past years (against the CDF peak and against superluminal neutrinos in 2011; in favor of the Higgs boson in 2011; against the 750 GeV di-photon in 2015, not to mention that against Supersymmetry going on since it failed to predict new phenomenology below the Z⁰ – or the W? – mass at LEP, thus inducing me more than twenty years ago to give away all SUSY Monte Carlo generators I had developed in order to optimize the performance of the HERA detectors), I can serenely bet, as I have kept saying since July 2012, that the first 5-sigma claim from LHC will be a fluke. (I have instead little to comment on the sociology of the Particle Physics theory community and on the validity of ‘objective’ criteria to rank scientific value and productivity, the situation being self-evident from the hundreds of references in a review paper which even had in the front page a fake PDG entry for the particle [60] and other amenities you can find on the web, like [61].)

Id.

Bayesian anatomy of the 750 GeV fluke

The statistical anomalies at about 750 GeV in ATLAS [1, 2] and CMS [3, 4] searches for a diphoton resonance (denoted in this text as F, for digamma) at √s = 13 TeV with about 3/fb caused considerable activity (see e.g., Refs. [5, 6, 7]). The experiments reported local significances, which incorporate a look-elsewhere effect (LEE, see e.g., Refs. [8, 9]) in the production cross section of the F, of 3.9σ and 3.4σ, respectively, and global significances, which incorporate a LEE in the production cross section, mass and width of the F, of 2.1σ and 1.6σ, respectively. There was concern, however, that an overall LEE, accounting for the numerous hypothesis tests of the SM at the LHC, cannot be incorporated, and that the plausibility of the F was difficult to gauge.

Whilst ultimately the F was disfavoured by searches with about 15/fb [10, 11], we directly calculate the relative plausibility of the SM versus the SM plus F in light of ATLAS data available during the excitement, matching, wherever possible, parameter ranges and parameterisations in the frequentist analyses. The relative plausibility sidesteps technicalities about the LEE and the frequentist formalism required to interpret significances. We calculate the Bayes-factor (see e.g., Ref. [12]) in light of ATLAS data,

Our main result is that, at its peak, the Bayes-factor was about 7.7 in favour of the F. In other words, in light of the ATLAS 13 TeV 3.2/fb and 8 TeV 20.3/fb diphoton searches, the relative plausibility of the F versus the SM alone increased by a factor of about eight. This was “substantial” on the Jeffreys’ scale [13], lying between “not worth more than a bare mention” and “strong evidence.” For completeness, we calculated that this preference was reversed by the ATLAS 13 TeV 15.4/fb search [11], resulting in a Bayes-factor of about 0.7. Nevertheless, the interest in F models in the interim was, to some degree, supported by Bayesian and frequentist analyses. Unfortunately, CMS performed searches in numerous event categories, resulting in a proliferation of background nuisance parameters and making replication difficult without cutting corners or considerable computing power.
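As an illustration of how such numbers are read on the Jeffreys' scale, here is a minimal sketch. It assumes the commonly tabulated form of the scale, with grade boundaries at half-decades of the Bayes factor (10^0.5, 10, 10^1.5, 100); the function name `jeffreys_grade` and the decision to grade a Bayes factor below one by the strength of its reciprocal are choices made for this example, not part of the analysis quoted above.

```python
import math

# Upper edges of each grade in units of log10(BF), assuming the
# half-decade version of the Jeffreys' scale.
JEFFREYS_GRADES = [
    (0.5, "not worth more than a bare mention"),
    (1.0, "substantial"),
    (1.5, "strong"),
    (2.0, "very strong"),
]


def jeffreys_grade(bayes_factor):
    """Return the Jeffreys' grade for the favoured hypothesis (hypothetical helper)."""
    if bayes_factor <= 0:
        raise ValueError("a Bayes factor must be positive")
    # Use the absolute value so that BF < 1 is graded as evidence
    # of the same strength for the other hypothesis.
    strength = abs(math.log10(bayes_factor))
    for upper_edge, label in JEFFREYS_GRADES:
        if strength < upper_edge:
            return label
    return "decisive"


print(jeffreys_grade(7.7))  # peak Bayes factor in favour of the F
print(jeffreys_grade(0.7))  # Bayes factor after the 15.4/fb search
```

With these boundaries a Bayes factor of 7.7 falls in the "substantial" grade, while 0.7 is a preference for the SM alone that is "not worth more than a bare mention".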